January 17th, 2025

The risks of artificial intelligence are all too real.

As AI becomes a part of everyday life, it’s creating breakthroughs in deep learning, data-driven decision-making and problem-solving. And it offers huge potential. AI could soon play a major role in improving health, wellbeing and prosperity for society.

But with that potential comes some serious worries. Will AI lead to discrimination, unjust restrictions, copyright issues or deep fakes? How can privacy and security be maintained? These challenges, and more, underline why it’s crucial for businesses to have strong governance frameworks in place to help manage the risks and ensure accountability for AI systems, developers and users.

The CEO Perspective on AI Governance

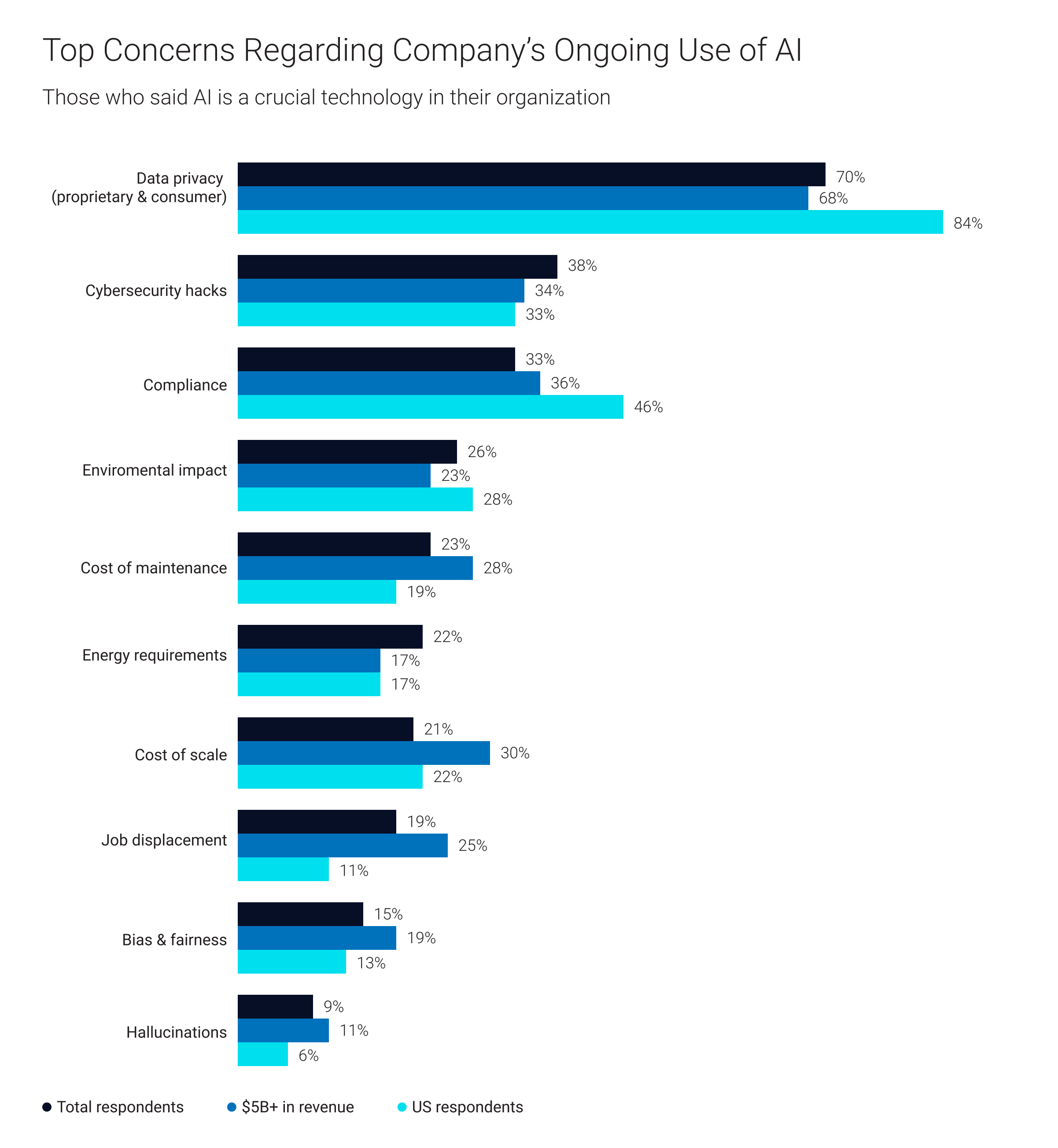

WSJ Intelligence and NTT recently conducted a global study of CEOs who are leading the adoption of AI-driven, sustainable and advanced connectivity technologies, and found that many are concerned about the potential impact of AI. Data privacy is a particular issue: globally, seven out of 10 (70%) of those who said AI is a crucial technology in their firm named data privacy as their top concern. That number rises to 84% among CEOs based in the U.S. CEOs and executives surveyed also noted cybersecurity, compliance issues, environmental impact and cost of maintenance among the primary challenges worrying them. Some also reported concerns about job displacement as well as bias and fairness.

When asked about the most important challenges facing the adoption of safe, secure and trustworthy AI for society, executives from larger companies and U.S. firms were most likely to report concerns about overdependence on AI, fearing it could erode critical thinking and creativity within the workforce. A full 84% of respondents said they expect AI technologies like large language models (LLMs) to become increasingly personalized, potentially heightening these issues. Sustainability is also a concern, with 85% of respondents expressing both interest in using AI and concerns about its growing energy consumption.

At the same time, 87% of CEOs in the survey noted an urgent necessity for robust governance and risk management frameworks to ensure the safe and responsible development of AI. In other words, CEOs recognize that AI brings risks, but they also understand that clear ethical guidelines for AI governance are essential for mitigating those risks.

How NTT’s AI Charter Informs Our Approach

Despite the consensus the survey revealed around the need for guidelines, not every company’s approach will be the same. Governance frameworks must be customized to meet the unique needs of each organization. For some, this means overseeing third-party AI tools, while for others it involves coordinating AI efforts across various business units.

At NTT, we see important opportunities to embed AI more deeply into business and society, with benefits for all. Our approach to AI is built around the six principles that make up our AI Charter:

- Enabling sustainable development
- Human autonomy
- Ensuring fairness and openness
- Security
- Privacy
- Communication & co-creation with society

Investing in Sustainable and Risk Management Technology

To support this growing focus on ethical AI governance, the majority of respondents expect to increase spending on sustainable technology and risk management solutions by at least 10% in 2025.

Sustainable tech and risk management solution spending to grow by at least 10%

The WSJ Intelligence & NTT Global Study shows that roughly one in five CEOs (22%) is worried about AI’s energy needs. Generative AI models, in particular, require significant computing resources, consuming up to 33 times more energy for a task than traditional, task-specific software[1]. Executives are concerned about balancing these sustainability issues with technological deployment, and are seeking to invest in technology that can minimize energy consumption.

At the same time, growth in risk management investments indicates a proactive approach to asset protection, which is critical given the ever-growing costs of resolving data breaches. Companies’ cyber spending is already higher than ever, with $219 billion spent on legacy solutions such as firewalls and VPNs[2]. This figure is expected to increase as generative AI advances alongside continuing adoption of cloud services, growth of hybrid workplaces, and evolving regulatory landscapes.

Looking to the Future

The WSJ Intelligence & NTT Global Study reveals that company executives see AI as far more than a passing trend; in fact, most recognize it as key to the future success of their businesses. It can help to improve operations and drive profitability, and many of those surveyed see AI as a powerful catalyst that can generate substantial value.

But they recognize the challenges of AI, too: risks that include high energy consumption, privacy and security concerns, potential biases in AI models and the danger of human overreliance on automated systems. As executives seek to balance the benefits of AI with its challenges and risks, clear guidelines and ethical governance will chart the way.

About the WSJ Intelligence and NTT Global Study

NTT partnered with WSJ Intelligence to conduct a global study of CEOs, seeking insights on the adoption and ethical deployment of AI-driven, sustainable and advanced connectivity technologies to build a future-ready enterprise.

This was a global quantitative study of 351 CEOs (n=351) in select industries, at companies with annual revenue of at least $1B in the U.S. or at least $500M internationally. Most respondents work in tech, manufacturing or financial services, and most come from firms with at least $1B in annual sales. The survey ran in November 2024.

Sources:

[1] Luccioni, Alexandra Sasha, Yacine Jernite, and Emma Strubell. “Power Hungry Processing: Watts Driving the Cost of AI Deployment?” ArXiv (Cornell University), November 2023.

[2] Chaudhry, Jay. “Why Cybersecurity and Risk Management Are Crucial for Growth.” World Economic Forum. January 9, 2024.